Quickstart

Cornac is a Python library for building and training recommendation models. It focuses on making it convenient to work with models that leverage auxiliary data (e.g., item descriptive text and images, social networks, etc.).

Cornac enables fast experiments and straightforward implementations of new models. It is highly compatible with existing machine learning libraries (e.g., TensorFlow, PyTorch).

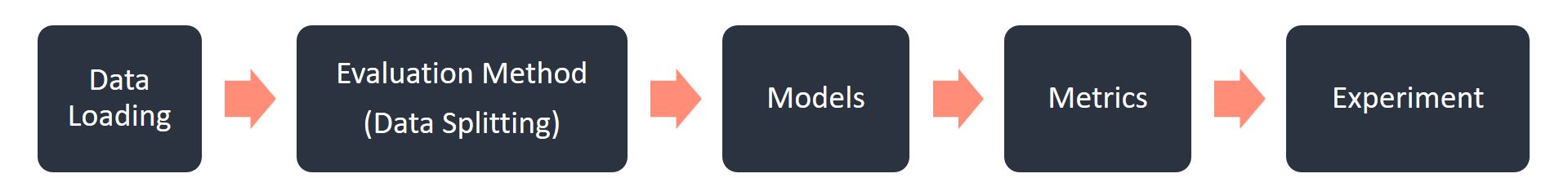

The Cornac Experiment Concept

The main idea behind Cornac is to provide a simple and flexible way to experiment with different models, datasets and metrics without having to manually implement and run all the code yourself.

Here are some key concepts related to Cornac:

A dataset refers to a specific collection of input data that is used to train or test an algorithm.

A model refers to a specific (machine learning) algorithm that is used to train on a dataset to learn user preferences and make recommendations.

An evaluation metric refers to a specific performance measure or score that is being used to evaluate or compare different models during the experimentation process.

An experiment is a one-stop shop where you specify how the dataset is prepared and split, which evaluation metrics to use, and which models to compare.
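To make the relationship between these four concepts concrete, here is a minimal plain-Python sketch of what an experiment does: train each model on the training data, then score it with each metric. It is not Cornac's implementation; `MostPopular`, `hit_rate_at_k`, and `run_experiment` are hypothetical names invented for this illustration, and the toy records are made up.

```python
from typing import Callable, Dict, List, Tuple

# A (user, item, rating) record; a dataset here is just a list of these.
Triple = Tuple[str, str, float]


class MostPopular:
    """Hypothetical baseline model: recommends the items rated most often in training."""

    name = "MostPopular"

    def fit(self, train: List[Triple]) -> None:
        counts: Dict[str, int] = {}
        for _, item, _ in train:
            counts[item] = counts.get(item, 0) + 1
        # Most frequent items first; ties broken by item id so the order is deterministic.
        self.ranking = sorted(counts, key=lambda item: (-counts[item], item))

    def recommend(self, user: str, k: int) -> List[str]:
        return self.ranking[:k]  # the same list for every user


def hit_rate_at_k(model: MostPopular, test: List[Triple], k: int) -> float:
    """Hypothetical metric: share of test records whose item is in that user's top-k list."""
    hits = sum(item in model.recommend(user, k) for user, item, _ in test)
    return hits / len(test)


def run_experiment(
    train: List[Triple],
    test: List[Triple],
    models: list,
    metrics: Dict[str, Callable],
) -> Dict[str, Dict[str, float]]:
    """Train every model once, then evaluate it with every metric."""
    results: Dict[str, Dict[str, float]] = {}
    for model in models:
        model.fit(train)
        results[model.name] = {name: metric(model, test) for name, metric in metrics.items()}
    return results


train = [("u1", "a", 5.0), ("u2", "a", 4.0), ("u2", "b", 3.0), ("u3", "c", 2.0)]
test = [("u1", "b", 4.0), ("u3", "a", 5.0)]
print(run_experiment(train, test, [MostPopular()], {"HitRate@1": lambda m, t: hit_rate_at_k(m, t, 1)}))
# {'MostPopular': {'HitRate@1': 0.5}}
```

Keeping the model, the metrics, and the data split as separate pieces is what lets an experiment swap any one of them without touching the others.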

The First Experiment

In today’s world of countless movies and TV shows at our fingertips, finding what we truly enjoy can be a challenge.

This experiment focuses on how we could utilize a recommender system to provide us with personalized recommendations based on our preferences.

About the MovieLens dataset

The MovieLens dataset, a collection of movie ratings and user preferences, remains highly relevant today and is widely used as a benchmark for comparing recommendation algorithms.

Sample data from the MovieLens 100K dataset

The MovieLens 100K dataset contains 100,000 ratings from 943 users on 1,682 movies. Each user has rated at least 20 movies on a scale of 1 to 5.

The dataset also contains additional information about the movies, such as genre and year of release.

| | user_id | item_id | rating |
|---|---|---|---|
| 0 | 196 | 242 | 3.0 |
| 1 | 186 | 302 | 3.0 |
| 2 | 22 | 377 | 1.0 |
| 3 | 244 | 51 | 2.0 |
| 4 | 166 | 346 | 1.0 |

A sample of 5 records from the MovieLens 100K dataset is shown above.
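The counts quoted for MovieLens 100K also show how sparse the data is: most user-movie pairs have no rating at all, which is typical of recommendation data. A quick back-of-the-envelope calculation, using only the figures given above (943 users, 1,682 movies, 100,000 ratings):

```python
n_ratings = 100_000
n_users = 943
n_movies = 1_682

possible_pairs = n_users * n_movies      # every (user, movie) combination
density = n_ratings / possible_pairs     # share of pairs that actually carry a rating
ratings_per_user = n_ratings / n_users   # an average; the stated minimum is 20

print(f"{possible_pairs:,} possible pairs")                # 1,586,126 possible pairs
print(f"density {density:.2%}, sparsity {1 - density:.2%}")  # density 6.30%, sparsity 93.70%
print(f"{ratings_per_user:.1f} ratings per user on average")  # 106.0 ratings per user on average
```

In other words, only about 6.3% of the possible user-movie pairs are rated, and the model has to infer preferences for the rest.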

The Experiment

Note

This tutorial assumes that you have already installed Cornac. If you have not, see Installation.

In this experiment, we will be using the MovieLens 100K dataset to train and evaluate a recommender system that can predict how a user would rate a movie based on their preferences learned from past ratings.

1. Data Loading

Create a Python file called `first_experiment.py` and add the following code to it:

```python
import cornac

# Load a sample dataset (e.g., MovieLens)
ml_100k = cornac.datasets.movielens.load_feedback()
```

In the above code, the variable `ml_100k` holds the MovieLens 100K dataset.

MovieLens is one of many datasets available in Cornac. See Built-in Datasets for the others.
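For intuition about what loading rating feedback produces, the sketch below parses raw MovieLens-style records into (user, item, rating) triples, matching the columns of the sample table. It is a hypothetical helper (`parse_uir`), not Cornac's loader, and it assumes the layout of the raw MovieLens 100K ratings file, where each line holds a user ID, an item ID, a rating, and a timestamp separated by tabs.

```python
from typing import Iterable, List, Tuple


def parse_uir(lines: Iterable[str]) -> List[Tuple[str, str, float]]:
    """Turn 'user item rating [timestamp]' lines into (user, item, rating) triples."""
    triples = []
    for line in lines:
        fields = line.split()  # splits on tabs or spaces
        if not fields:
            continue  # skip blank lines
        user, item, rating = fields[:3]  # ignore a trailing timestamp, if any
        # IDs stay strings: they are labels, not quantities.
        triples.append((user, item, float(rating)))
    return triples


# The five sample records from the table, written as raw tab-separated lines.
raw = ["196\t242\t3", "186\t302\t3", "22\t377\t1", "244\t51\t2", "166\t346\t1"]
for record in parse_uir(raw):
    print(record)  # first line printed: ('196', '242', 3.0)
```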

2. Data Splitting

We need to split the data into training and testing sets. A common approach is to split by a fixed ratio (e.g., 80% for training and 20% for testing).

A training set is used to train the model, while a testing set is used to evaluate the model’s performance.
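A ratio split boils down to shuffling the records and cutting them at the chosen fraction. The sketch below shows that idea in plain Python with a hypothetical `ratio_split` helper and made-up records; Cornac's `RatioSplit` may also handle details this sketch ignores, such as users or items that appear only in the test set.

```python
import random
from typing import List, Tuple

Triple = Tuple[str, str, float]


def ratio_split(
    data: List[Triple], test_size: float = 0.2, seed: int = 123
) -> Tuple[List[Triple], List[Triple]]:
    """Shuffle a copy of the data with a fixed seed, then cut off the test fraction."""
    rng = random.Random(seed)  # private generator: same seed, same shuffle
    shuffled = list(data)      # leave the caller's list untouched
    rng.shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_size))
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)


data = [(f"u{n % 3}", f"i{n}", float(1 + n % 5)) for n in range(10)]
train, test = ratio_split(data, test_size=0.2, seed=123)
print(len(train), len(test))  # 8 2
```

Because the shuffle uses its own seeded generator, calling `ratio_split` again with the same seed returns exactly the same split.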

```python
from cornac.eval_methods import RatioSplit

# Split the data into training and testing sets
rs = RatioSplit(data=ml_100k, test_size=0.2, rating_threshold=4.0, seed=123)
```

In this example, we set the following parameters for the `RatioSplit` object:

- `data=ml_100k` uses the MovieLens 100K dataset.
- `test_size=0.2` splits the data into 80% training and 20% testing.
- `rating_threshold=4.0` treats ratings greater than or equal to 4.0 as positive (relevant) feedback when computing ranking metrics. Lower ratings are treated as negative, i.e., items the user did not like.
- `seed=123` makes the results reproducible: the same seed always produces the same split.
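The effect of `rating_threshold` on evaluation can be pictured as follows: each test rating becomes a yes/no relevance label, and only the positive items count as hits for ranking metrics. This is a hypothetical helper (`relevant_items`) with made-up records, written for illustration and not part of Cornac's API.

```python
from typing import Dict, List, Set, Tuple


def relevant_items(
    test: List[Tuple[str, str, float]], rating_threshold: float = 4.0
) -> Dict[str, Set[str]]:
    """Group, per user, the test items rated at or above the threshold (the positives)."""
    positives: Dict[str, Set[str]] = {}
    for user, item, rating in test:
        if rating >= rating_threshold:  # 4.0 counts as positive; 3.9 does not
            positives.setdefault(user, set()).add(item)
    return positives


test = [("u1", "a", 5.0), ("u1", "b", 3.0), ("u2", "c", 4.0), ("u3", "d", 2.0)]
print(relevant_items(test))  # {'u1': {'a'}, 'u2': {'c'}}
```

User `u3` has no positive test item here, so a ranking metric has nothing to find for that user.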

3. Define Model

We need to define a model to train and evaluate. In this example, we will be using the Bayesian Personalized Ranking (BPR) model.

```python
from cornac.models import BPR

# Instantiate a recommender model (e.g., BPR)
models = [
    BPR(k=10, max_iter=200, learning_rate=0.001, lambda_reg=0.01, seed=123),
]
```

We set the following parameters for the `BPR` object:

- `k=10` sets the number of latent factors to 10, so each user and item is represented by a vector of 10 numbers.
- `max_iter=200` sets the maximum number of training iterations to 200.
- `learning_rate=0.001` sets the learning rate, which controls the size of each update the model makes during training.
- `lambda_reg=0.01` sets the regularization strength, which controls how strongly the model penalizes large values in the user and item vectors.
- `seed=123` makes the results reproducible. It is the same seed that we used for the `RatioSplit` object.
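BPR learns from pairs: for a user u, an item i the user interacted with should score higher than an item j the user did not. The sketch below performs stochastic gradient ascent on the BPR criterion ln σ(x_uij) with L2 regularization, where x_uij = p_u · (q_i - q_j). It is a simplified, hypothetical illustration (`bpr_step`, `random_factors`), not Cornac's implementation: it leaves out bias terms and the sampling of (u, i, j) triples from the data, and it uses a larger learning rate than the tutorial's 0.001 so the toy example moves quickly.

```python
import math
import random
from typing import List


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def random_factors(k: int, rng: random.Random) -> List[float]:
    """A latent vector of k small random numbers."""
    return [rng.gauss(0.0, 0.1) for _ in range(k)]


def bpr_step(pu: List[float], qi: List[float], qj: List[float],
             learning_rate: float = 0.05, lambda_reg: float = 0.01) -> float:
    """One in-place SGD update for the triple (u, i, j); returns x_uij before the update."""
    x_uij = sum(p * (a - b) for p, a, b in zip(pu, qi, qj))
    g = sigmoid(-x_uij)  # near 1 when j still outranks i, near 0 when i clearly wins
    for f in range(len(pu)):
        p, a, b = pu[f], qi[f], qj[f]  # old values, so all three updates use the same point
        pu[f] += learning_rate * (g * (a - b) - lambda_reg * p)
        qi[f] += learning_rate * (g * p - lambda_reg * a)
        qj[f] += learning_rate * (-g * p - lambda_reg * b)
    return x_uij


rng = random.Random(123)
k = 10  # same number of latent factors as in the tutorial
pu, qi, qj = random_factors(k, rng), random_factors(k, rng), random_factors(k, rng)
before = bpr_step(pu, qi, qj)
for _ in range(500):
    bpr_step(pu, qi, qj)
after = sum(p * (a - b) for p, a, b in zip(pu, qi, qj))
print(f"x_uij before: {before:.3f}, after: {after:.3f}")
```

After training, x_uij is positive, meaning the user's vector now scores item i above item j; the `lambda_reg` term keeps the vectors from growing without bound.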

4. Define Metrics

We need to define metrics to evaluate the model. In this example, we will use the Precision and Recall metrics.

```python
from cornac.metrics import Precision, Recall

# Define metrics to evaluate the models
metrics = [Precision(k=10), Recall(k=10)]
```

The `metrics` list contains the following metrics:

The Precision metric measures the proportion of recommended items that are relevant to the user. The higher the Precision, the better the model.

The Recall metric measures the proportion of relevant items that are recommended to the user. The higher the Recall, the better the model.

Note

Ranking-based metrics such as Precision and Recall are computed over a fixed number of top-ranked recommendations per user.

In this example, they are computed on the top 10 recommendations for each user (k=10).
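For a single user, both metrics can be computed from the ranked recommendation list and the set of relevant test items. The sketch below uses hypothetical helper names (`precision_at_k`, `recall_at_k`) and made-up item IDs; in an experiment, these per-user values are then averaged over the test users.

```python
from typing import List, Set


def precision_at_k(recommended: List[str], relevant: Set[str], k: int) -> float:
    """Fraction of the top-k recommended items that are relevant."""
    hits = sum(1 for item in recommended[:k] if item in relevant)
    return hits / k


def recall_at_k(recommended: List[str], relevant: Set[str], k: int) -> float:
    """Fraction of the relevant items that show up in the top-k recommendations."""
    if not relevant:
        return 0.0  # undefined without relevant items; real evaluators often skip such users
    hits = sum(1 for item in recommended[:k] if item in relevant)
    return hits / len(relevant)


recommended = ["m1", "m2", "m3", "m4", "m5", "m6", "m7", "m8", "m9", "m10"]  # one user's top 10
relevant = {"m2", "m7", "m42", "m99"}  # movies this user rated >= 4.0 in the test set
print(precision_at_k(recommended, relevant, k=10))  # 2 hits / 10 recommended = 0.2
print(recall_at_k(recommended, relevant, k=10))     # 2 hits / 4 relevant = 0.5
```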

5. Run Experiment

We can now run the experiment by putting everything together. This will train the model and evaluate its performance based on the metrics that we defined.

```python
# Put it together in an experiment, voilà!
cornac.Experiment(eval_method=rs, models=models, metrics=metrics, user_based=True).run()
```

We set the following parameters for the `Experiment` object:

- `eval_method=rs` uses the `RatioSplit` object that we defined earlier.
- `models=models` uses the `BPR` model that we defined earlier.
- `metrics=metrics` uses the `Precision` and `Recall` metrics that we defined earlier.
- `user_based=True` evaluates the model per user: a metric is first computed for each user and then averaged across users, so every user is weighted equally. Setting `user_based=False` instead averages over all ratings, so users with more ratings carry more weight. In Cornac, this setting matters for rating metrics (such as MAE or RMSE); ranking metrics such as Precision and Recall are computed per user either way.
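The difference between the two averaging strategies is easiest to see with a rating metric such as mean absolute error (MAE). In the made-up example below, one user has three small errors and another has a single large error; the helper `mae` is hypothetical, written for illustration, and is not Cornac's API.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def mae(errors: List[Tuple[str, float]], user_based: bool = True) -> float:
    """Mean absolute error from (user, prediction - truth) pairs, averaged two ways."""
    if not user_based:
        # Every rating counts equally.
        return sum(abs(e) for _, e in errors) / len(errors)
    per_user: Dict[str, List[float]] = defaultdict(list)
    for user, e in errors:
        per_user[user].append(abs(e))
    # First average within each user, then average those per-user scores.
    return sum(sum(v) / len(v) for v in per_user.values()) / len(per_user)


errors = [("heavy", 0.5), ("heavy", 0.5), ("heavy", 0.5), ("light", 2.0)]
print(mae(errors, user_based=False))  # (0.5 * 3 + 2.0) / 4 = 0.875
print(mae(errors, user_based=True))   # (0.5 + 2.0) / 2 = 1.25
```

Averaging per user gives the light user as much weight as the heavy user, which is why the user-based result is higher here.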

Full code at this point:

```python
import cornac
from cornac.eval_methods import RatioSplit
from cornac.models import BPR
from cornac.metrics import Precision, Recall

# Load a sample dataset (e.g., MovieLens)
ml_100k = cornac.datasets.movielens.load_feedback()

# Split the data into training and testing sets
rs = RatioSplit(data=ml_100k, test_size=0.2, rating_threshold=4.0, seed=123)

# Instantiate a matrix factorization model (e.g., BPR)
models = [
    BPR(k=10, max_iter=200, learning_rate=0.001, lambda_reg=0.01, seed=123),
]

# Define metrics to evaluate the models
metrics = [Precision(k=10), Recall(k=10)]

# Put it together in an experiment, voilà!
cornac.Experiment(eval_method=rs, models=models, metrics=metrics, user_based=True).run()
```

Run the Python code

Finally, run the script you have just written from your favourite terminal:

```shell
python first_experiment.py
```

What does the output mean?

```
TEST:
...
    | Precision@10 | Recall@10 | Train (s) | Test (s)
--- + ------------ + --------- + --------- + --------
BPR |       0.1110 |    0.1195 |    4.7624 |   0.7182
```

After training, Cornac evaluates the trained model on the test data (as split by `RatioSplit`) and computes the metrics we defined.

In the output above, we see the results for Precision@10 and Recall@10 (k=10). For example, a Precision@10 of 0.1110 means that, on average, about 1.1 of each user's 10 recommendations were relevant.

We also see the time, in seconds, that Cornac took to train the model (Train (s)) and to evaluate it on the test data (Test (s)).

Adding More Models

Often, we want to compare several models side by side.

Let's add a second model, Probabilistic Matrix Factorization (PMF), by updating the import and the `models` list:

```python
from cornac.models import BPR, PMF

# Instantiate a matrix factorization model (e.g., BPR, PMF)
models = [
    BPR(k=10, max_iter=200, learning_rate=0.001, lambda_reg=0.01, seed=123),
    PMF(k=10, max_iter=100, learning_rate=0.001, lambda_reg=0.001, seed=123),
]
```
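Whereas BPR learns from pairwise preferences, PMF fits the rating values directly: it approximates each observed rating r_ui by the dot product p_u · q_i and penalizes large factors, which corresponds to Gaussian priors on the factors. The sketch below trains that basic linear formulation with plain SGD on a made-up toy dataset; `train_pmf` and `sse` are illustrative names, and Cornac's PMF may differ in details (for example, it may rescale ratings or use a non-linear variant).

```python
import random
from typing import Dict, List, Tuple

Triple = Tuple[str, str, float]
Factors = Dict[str, List[float]]


def train_pmf(ratings: List[Triple], k: int = 2, max_iter: int = 100,
              learning_rate: float = 0.01, lambda_reg: float = 0.001,
              seed: int = 123) -> Tuple[Factors, Factors]:
    """Fit user vectors P and item vectors Q so that P[u] . Q[i] approximates each rating."""
    rng = random.Random(seed)
    users = sorted({u for u, _, _ in ratings})
    items = sorted({i for _, i, _ in ratings})
    P = {u: [rng.gauss(0.0, 0.1) for _ in range(k)] for u in users}
    Q = {i: [rng.gauss(0.0, 0.1) for _ in range(k)] for i in items}
    for _ in range(max_iter):  # one pass over all ratings per iteration
        for u, i, r in ratings:
            pu, qi = P[u], Q[i]
            err = r - sum(a * b for a, b in zip(pu, qi))
            for f in range(k):
                a, b = pu[f], qi[f]  # old values for both updates
                pu[f] += learning_rate * (err * b - lambda_reg * a)
                qi[f] += learning_rate * (err * a - lambda_reg * b)
    return P, Q


def sse(P: Factors, Q: Factors, ratings: List[Triple]) -> float:
    """Sum of squared rating errors on the given records."""
    return sum((r - sum(a * b for a, b in zip(P[u], Q[i]))) ** 2 for u, i, r in ratings)


toy = [("u1", "m1", 5.0), ("u1", "m2", 1.0), ("u2", "m1", 4.0), ("u2", "m3", 2.0), ("u3", "m2", 5.0)]
untrained = train_pmf(toy, max_iter=0)  # same seed, so same starting point
trained = train_pmf(toy, max_iter=100)
print(f"SSE before: {sse(*untrained, toy):.3f}, after: {sse(*trained, toy):.3f}")
```

PMF is trained to predict rating values, whereas BPR is trained to order items, which is one reason the two can score differently on ranking metrics such as Precision@10.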

Full code at this point:

```python
import cornac
from cornac.eval_methods import RatioSplit
from cornac.models import BPR, PMF
from cornac.metrics import Precision, Recall

# Load a sample dataset (e.g., MovieLens)
ml_100k = cornac.datasets.movielens.load_feedback()

# Split the data into training and testing sets
rs = RatioSplit(data=ml_100k, test_size=0.2, rating_threshold=4.0, seed=123)

# Instantiate a matrix factorization model (e.g., BPR, PMF)
models = [
    BPR(k=10, max_iter=200, learning_rate=0.001, lambda_reg=0.01, seed=123),
    PMF(k=10, max_iter=100, learning_rate=0.001, lambda_reg=0.001, seed=123),
]

# Define metrics to evaluate the models
metrics = [Precision(k=10), Recall(k=10)]

# Put it together in an experiment, voilà!
cornac.Experiment(eval_method=rs, models=models, metrics=metrics, user_based=True).run()
```

Now run it again!

```shell
python first_experiment.py
```

```
TEST:
...
    | Precision@10 | Recall@10 | Train (s) | Test (s)
--- + ------------ + --------- + --------- + --------
BPR |       0.1110 |    0.1195 |    4.7624 |   0.7182
PMF |       0.0813 |    0.0639 |    2.5635 |   0.4254
```

We now see the results of both models side by side. Even this simple example shows how easy it is to compare different models.

Based on the metric values and the training and evaluation times, we can then tune the parameters further and decide which model to use for our application.